The Best AI Detectors for ChatGPT (GPT-3.5 and GPT-4), Bard, and More

AI content creation has swiftly become one of the most controversial issues of our time. Amid ongoing fights over ethics, copyright, sourcing, and more, there has also been a great deal of misinformation about what it is, how it works, and how it can be used.

This has led to a lot of people taking sides:

- On the one hand, you have the tech folks, heavy proponents of various AI systems. Some are the same people who were big into NFTs and crypto, always looking for a way to disrupt an industry and, more importantly, profit from it. Others are simply businesspeople, developers, or casual users.

- On the other hand, you have the writers and artists whose work is taken, without compensation or credit, to train the statistical models behind AI. Many feel threatened by AI, since software is being trained to do their jobs. Others see it as a blessing that may actually help them work more efficiently.

I’m not here to get into the ethical debate, but it’s important to acknowledge the debate exists because it brings our topic into tight focus: how to recognize and detect AI-generated content.

First, though, why would you want to be able to detect AI-generated content?

Why Detecting AI Content is Important

Telling the difference between AI content and human content is important for a few reasons.

First, some people are staunchly against AI-generated content, to the extent that they’ll boycott or otherwise stop using your business because of it. Many of them consider AI use unethical, at least given how AI is currently trained: on broad libraries of what they see as stolen work, with no credit, payment, or opt-out. If the content you produce is detectable as AI content, it will land you on these people’s blacklist.

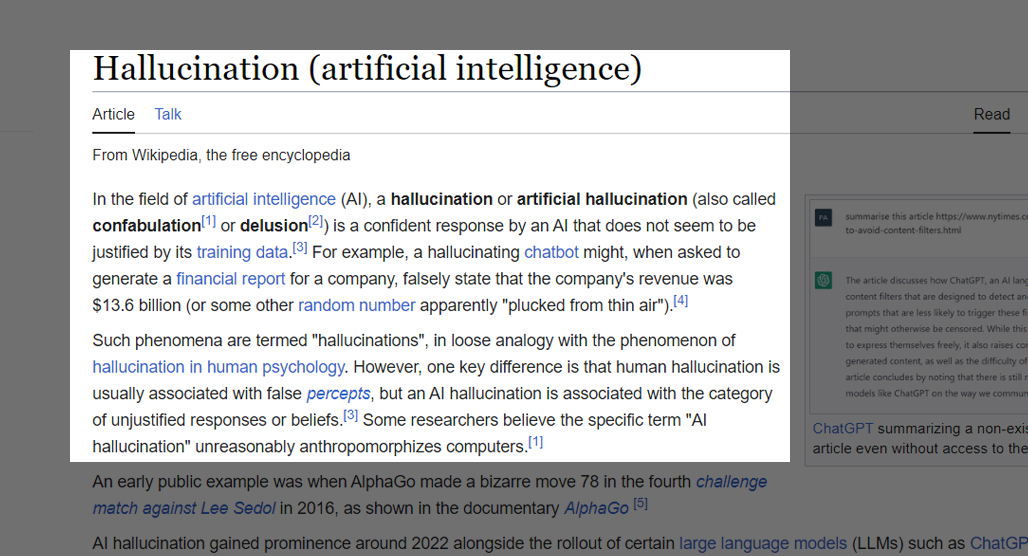

Second, there’s an important detail about how AI works and, more importantly, how it doesn’t: current-generation content AIs have no concept of fact. If you ask an AI a question, it will deliver its answer with confidence whether or not that answer is actually true. The technical term for this is “hallucination.” We’re already seeing it in very concerning places, like the lawyers who used ChatGPT to prepare a legal brief, only for it to cite court cases that didn’t exist, much to the dismay of everyone involved.

This matters because if a piece of content is detectable as AI, it may contain hallucinations. We know that a big part of modern-day SEO is E-E-A-T: the Experience, Expertise, Authoritativeness, and Trustworthiness of content and its author (Google added “Experience” to the original E-A-T in late 2022). Well, if an AI generated the content, there’s no real E-E-A-T behind it.

The third reason it’s important to detect AI content has to do with how AI works under the hood. I’m vastly simplifying here, but you can think of it this way. When you’re typing a text or message on your phone, your keyboard software suggests words that are likely to come next in the sentence. Language model AIs like ChatGPT, Bard, Bing, and so on, are like that, but on steroids. They’re trained on far more data, so they have much more information with which to build a statistical model. But the core concept is the same: a prediction of which words will come next.

Critically, this means that for a given prompt, a language model AI is going to generate the statistically most likely response, with some fuzziness from the randomness built into generation (controlled by settings such as temperature) and from the specifics of the prompt (like tone, voice, character, and so on).
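To make the keyboard analogy concrete, here’s a toy next-word predictor in Python. It’s a minimal sketch that just counts which word follows which in a tiny made-up text (a “bigram” model); real language models use neural networks over vastly more data, so treat this as an illustration of the prediction idea, not of how ChatGPT or Bard are actually built. The corpus and function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, like a keyboard suggestion."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(followers: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily chain the most likely next word, starting from `start`."""
    words = [start.lower()]
    while len(words) < max_words:
        nxt = predict_next(followers, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# A tiny, made-up training text.
corpus = "the cat sat on the mat the cat ate the fish the dog sat on the rug"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # cat (it follows "the" twice)
print(generate(model, "the"))      # the cat sat on the
```

Because this greedy version always picks the single most likely word, the same starting word always produces the same output. Real chatbots add controlled randomness when choosing words, which is why their answers vary somewhat, but the pull toward the most likely phrasing remains.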

If you and a competitor both ask a language model AI to generate a piece of content on the same topic using substantially similar prompts, the resulting pieces of content are going to look pretty similar. They won’t be identical – they won’t even be close enough to trigger something like Copyscape – but they’ll very likely have similar phrasing, similar details, similar structures, and so on.

How AI Content Detectors Work

This is a good lead-in to a discussion of how AI detectors work.

AI detectors take a few different approaches, each based on knowledge of how the AI generators themselves work.

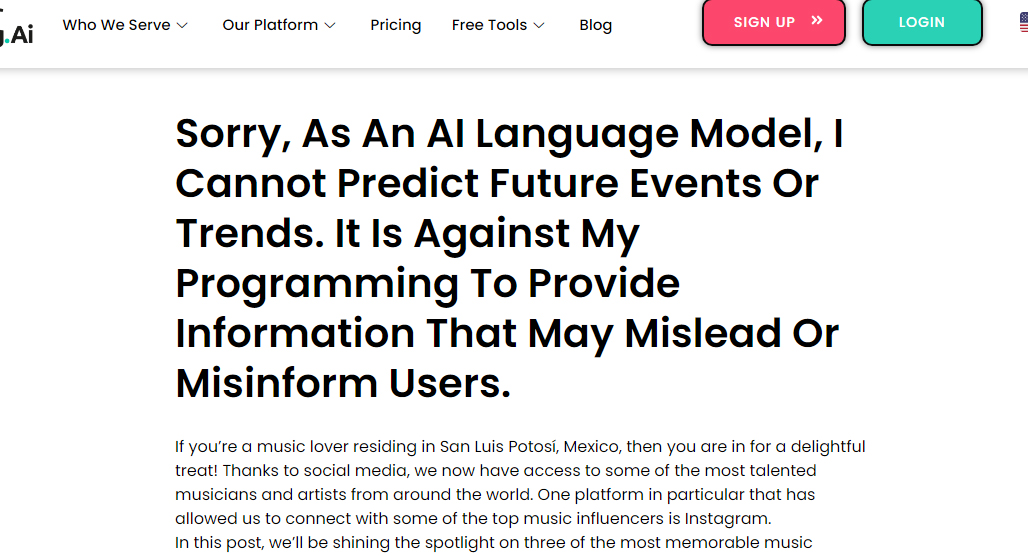

The first is to look for stupid mistakes. AI models like ChatGPT have limitations built into them. They’ll refuse to talk about certain subjects, decline certain kinds of requests, and so on. These safeguards are meant to prevent malicious use, but they can be triggered by surprisingly innocuous requests.

A simple way to detect whether content was generated by AI, then, is to look for its warnings. If a piece of content includes the phrase “As an AI language model,” and it’s not being used as a joke or as a reference like I’m using it right now, it could very well be AI-generated. The Verge has a good breakdown of this phenomenon.

Of course, this is extremely easy to defeat with even minimal proofreading and review before publishing, so it only catches the lowest-effort spam out there, much of which you wouldn’t give the time of day anyway.
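A check this simple is easy to automate. The Python sketch below scans text for telltale boilerplate phrases. Only “As an AI language model” comes from the discussion above; the other entries in the list are illustrative guesses you’d replace with phrases you actually run into. It can’t tell a genuine slip from a quotation or a joke, so a human still needs to look at anything it flags.

```python
from __future__ import annotations

import re

# "as an ai language model" is the classic giveaway; the other entries are
# illustrative guesses. Phrases avoid apostrophes so curly vs. straight quotes don't matter.
TELLTALE_PHRASES = [
    "as an ai language model",
    "my knowledge cutoff",
    "i do not have access to real-time",
]


def find_telltales(text: str) -> list[str]:
    """Return every telltale phrase that appears in `text`, ignoring case and spacing."""
    # Lowercase and collapse runs of whitespace (including line breaks) to single spaces.
    normalized = re.sub(r"\s+", " ", text.lower())
    return [phrase for phrase in TELLTALE_PHRASES if phrase in normalized]


sample = "As an AI\nlanguage model, I cannot give financial advice."
print(find_telltales(sample))  # ['as an ai language model']
```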

The second option is to perform a language analysis of the content. Essentially, the detector examines the text for things like how closely it adheres to certain grammatical patterns, particularly repetitive ones, and how limited its word choice tends to be. There are technical names for this (you’ll often see “perplexity” and “burstiness”), as well as a bunch of theories behind it, but I don’t need to go into them here.

Suffice it to say that this ties into the last point about how AI content works and why detecting it is important. Because the AI chooses words and phrases based on how statistically likely they are, it’s prone to repetition in both wording and structure. And those repetitions don’t just happen within a given piece; they happen across an entire body of work.
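For a rough feel of what repetitive patterns and limited word choice look like as numbers, here’s a Python sketch of two simple signals: the type-token ratio (unique words divided by total words) and the share of three-word sequences that repeat. These are crude stand-ins chosen for illustration; commercial detectors don’t publish their exact methods and generally lean on a language model’s own probabilities, so don’t read these scores as a verdict.

```python
from __future__ import annotations

import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into words (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(words: list[str]) -> float:
    """Unique words / total words; lower values mean more limited word choice."""
    return len(set(words)) / len(words) if words else 0.0


def repeated_trigram_share(words: list[str]) -> float:
    """Fraction of three-word sequences that occur more than once in the text."""
    trigrams = list(zip(words, words[1:], words[2:]))
    if not trigrams:
        return 0.0
    counts = Counter(trigrams)
    repeated = sum(count for count in counts.values() if count > 1)
    return repeated / len(trigrams)


words = tokenize("It is important to note that it is important to note.")
print(round(type_token_ratio(words), 2))        # 0.55 (6 unique out of 11)
print(round(repeated_trigram_share(words), 2))  # 0.67 (6 of 9 trigrams repeat)
```

Running the same functions over a whole archive of articles, rather than a single piece, gets at the body-of-work repetition described above.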

This one is tricky to rely on because there are a ton of variables in how language is used, and the more sophisticated the AI, the less likely it is to fall into these patterns. The same goes for AI content that uses advanced, multi-stage prompts or goes through an editing pass to rephrase or rewrite it.

A third way AI detectors work is through repetition and comparison. It works like this:

- You feed a piece of content into the detector.

- The detector scans it and identifies the core topic or keywords from the content.

- The detector then uses one or more AI models to generate content on that topic.

- The detector then compares the output with the initial content you fed in, looking for similarities.

- Finally, the detector makes a judgment on the likelihood of the content being AI-generated.

Often, one generation is not enough, and the detector will have the AI create content five or ten times, comparing all of them. The more similar the initial content is to the generated content, the more likely it is to be AI-generated itself.
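Here’s a minimal Python sketch of steps two, four, and five of that process. It picks out keywords by simple word frequency, then measures how much the submitted text overlaps with each AI sample using Jaccard similarity over three-word sequences. The generation step is deliberately left out: the sketch assumes you’ve already collected samples from whichever AI you’re testing. The stopword list and the 0.3 threshold are arbitrary placeholders, not values any real detector is known to use.

```python
from __future__ import annotations

import re
from collections import Counter

# A tiny, illustrative stopword list; real tools use much larger ones.
STOPWORDS = {"the", "and", "for", "that", "this", "with", "are", "you", "your"}


def words_of(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def top_keywords(text: str, k: int = 5) -> list[str]:
    """Step 2: the most frequent non-stopwords, usable as a topic for the AI prompt."""
    candidates = [w for w in words_of(text) if len(w) > 2 and w not in STOPWORDS]
    return [word for word, _ in Counter(candidates).most_common(k)]


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """All overlapping n-word sequences in the text."""
    words = words_of(text)
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared items / total distinct items (0.0 = nothing shared, 1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_to_samples(candidate: str, ai_samples: list[str]) -> float:
    """Step 4: average overlap between the submitted text and each AI-generated sample."""
    cand = shingles(candidate)
    scores = [jaccard(cand, shingles(sample)) for sample in ai_samples]
    return sum(scores) / len(scores) if scores else 0.0


def looks_ai_generated(candidate: str, ai_samples: list[str], threshold: float = 0.3) -> bool:
    """Step 5: a yes/no judgment; the threshold is an arbitrary placeholder."""
    return similarity_to_samples(candidate, ai_samples) >= threshold


print(top_keywords("Solar panels cut costs, and solar panels last.", k=2))  # ['solar', 'panels']

submitted = "solar panels can cut your energy costs"
samples = ["solar panels can cut your energy costs", "wind turbines need steady wind"]
print(similarity_to_samples(submitted, samples))  # 0.5
print(looks_ai_generated(submitted, samples))     # True
```

Averaging over several samples, rather than trusting a single one, mirrors the five-or-ten-generations approach: one lucky overlap matters less when it is diluted by the others.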

Again, this is tricky to rely on, because it can be defeated both by human review and editing and by sophisticated prompts designed to reduce the sameness of the AI output.

Another possible option, though rarer, is to look for consistency errors throughout a piece. Switching tenses, perspectives, or voices, particularly between sections, can be a sign that the content was generated in chunks that aren’t consistent with one another. This one is also tricky, because humans make mistakes like these too, especially writers who aren’t fluent in the language.
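Checking every kind of consistency is hard, but one narrow version, spotting a shift in grammatical person between sections, can be sketched in a few lines of Python. It labels each section by whether first-person or second-person pronouns dominate and reports where that label changes. The pronoun lists are a simplifying assumption (third person and tense are ignored entirely), and a flagged shift is just as likely to be a human’s slip as a sign of AI.

```python
from __future__ import annotations

import re
from collections import Counter

# Simplified pronoun lists; third person and tense are deliberately ignored.
PRONOUNS = {
    "first": {"i", "me", "my", "mine", "we", "our", "ours", "us"},
    "second": {"you", "your", "yours"},
}


def dominant_person(section: str) -> str:
    """Return "first", "second", or "none" depending on which pronouns dominate."""
    counts: Counter = Counter()
    for word in re.findall(r"[a-z]+", section.lower()):
        for person, pronouns in PRONOUNS.items():
            if word in pronouns:
                counts[person] += 1
    return counts.most_common(1)[0][0] if counts else "none"


def perspective_shifts(sections: list[str]) -> list[int]:
    """Indexes of sections whose dominant person differs from the section before."""
    labels = [dominant_person(s) for s in sections]
    return [
        i
        for i in range(1, len(labels))
        if "none" not in (labels[i - 1], labels[i]) and labels[i] != labels[i - 1]
    ]


sections = [
    "I think we should start with the basics.",
    "I tested my setup over a week.",
    "You should open your settings first.",
]
print(perspective_shifts(sections))  # [2]
```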

Solutions on the AI Side

Before moving on, it’s worth mentioning that AI developers are starting to acknowledge the problem of dishonesty around AI-generated content and its identification. One possible solution they’ve floated is a “watermark” on the content. It’s unclear how this would work, though: maybe patterns of misused punctuation or extra spaces, maybe patterns in word choice, who knows.

It’s unclear whether a viable watermarking system will ever appear. Between criticism of how AI is developed at its very core and mounting losses from operating expenses, the developers probably have their minds elsewhere. Either way, it’s not relevant until they release it.

What Are the Best AI Detectors?

You didn’t come here for a bunch of theory, right? You want to see what the best AI detectors are. Well, here’s a list. A disclaimer, though: none of them are 100% accurate. In fact, according to various tests performed by folks elsewhere online, the best ones seem to be only around 70% accurate. I suspect accuracy varies a lot depending on the effort put into the content, the subject, and how much information the AI has about it.

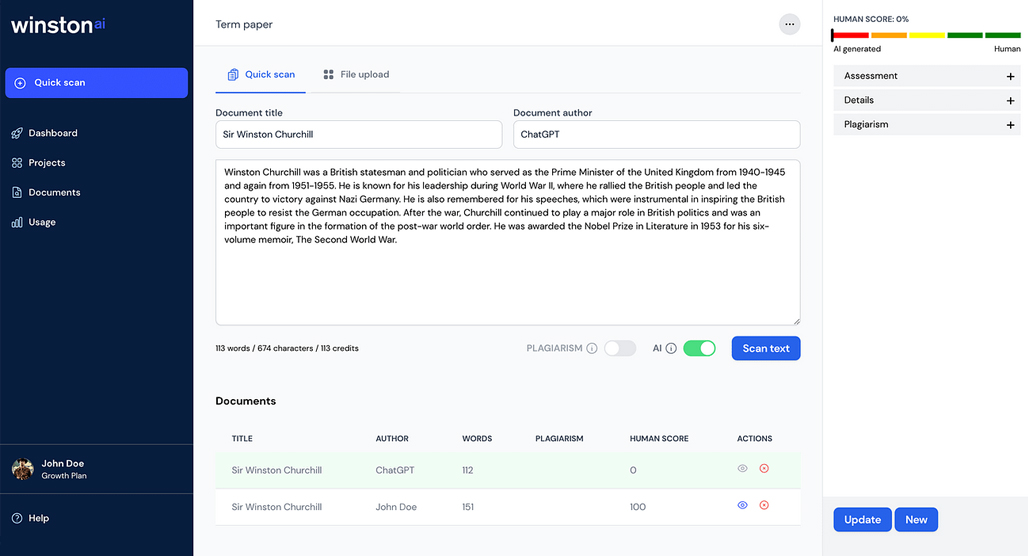

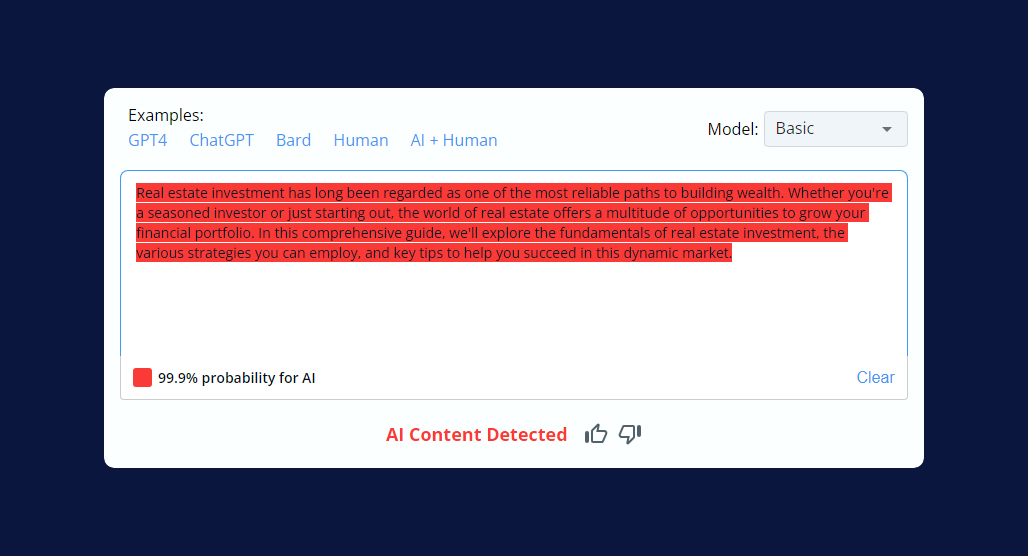

Winston AI – Generally recognized as the best of the currently available AI detectors, Winston is a fairly reliable detector for ChatGPT, Bard, Bing, Claude, and more. They boast a 99% accuracy rate, though real-world tests never live up to that in my experience. They have a free trial of one week or 2,000 words and then start at $12 per month.

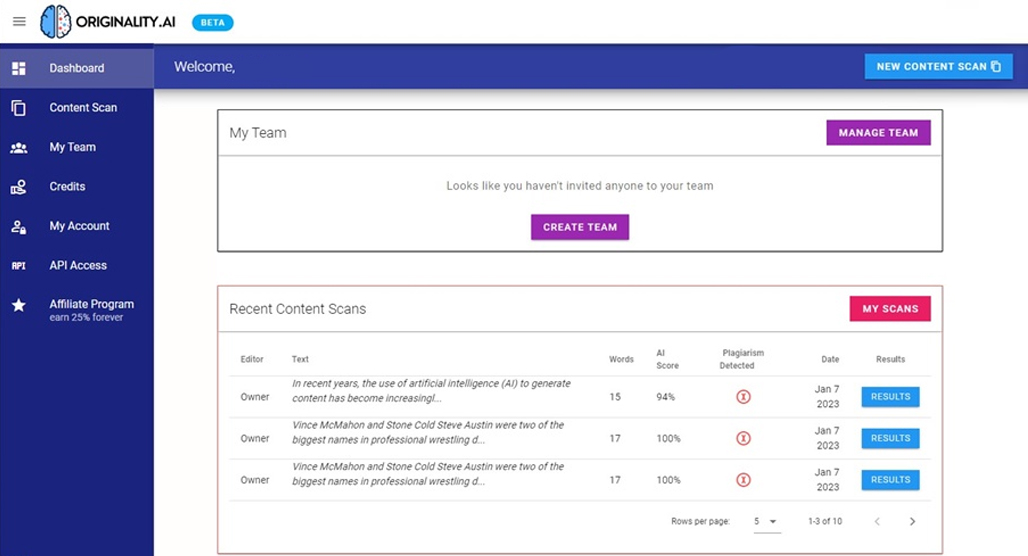

Originality AI – This is a combination of an AI detector, a plagiarism detector, and a readability analyzer. They also boast 99% accuracy for detecting AI content, which, again, is fairly questionable; you’ll need to test it yourself to know for sure. They use a credit system where one credit covers 100 words, and you can either pay $30 for 3,000 credits or subscribe at $15/month for 2,000 credits per month.
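Because Originality AI bills by credits rather than by words, it helps to run the numbers. This Python sketch uses the pricing described above (one credit per 100 words, $30 for 3,000 credits, or $15/month for 2,000 credits) and assumes, since the pricing description doesn’t say, that a partial 100-word block rounds up to a full credit.

```python
import math

WORDS_PER_CREDIT = 100  # one credit covers 100 words, per the pricing above


def credits_needed(word_count: int) -> int:
    """Credits to scan one piece; assumes partial 100-word blocks round up."""
    return math.ceil(word_count / WORDS_PER_CREDIT)


def cost_per_1000_words(price: float, credits: int) -> float:
    """Effective price of scanning 1,000 words under a given plan."""
    return price / (credits * WORDS_PER_CREDIT) * 1000


print(credits_needed(2500))           # 25
print(credits_needed(2550))           # 26 (under the rounding assumption)
print(cost_per_1000_words(30, 3000))  # about $0.10 per 1,000 words
print(cost_per_1000_words(15, 2000))  # about $0.075 per 1,000 words
```

At 25 credits per 2,500-word article, the one-time 3,000-credit purchase covers about 120 such articles.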

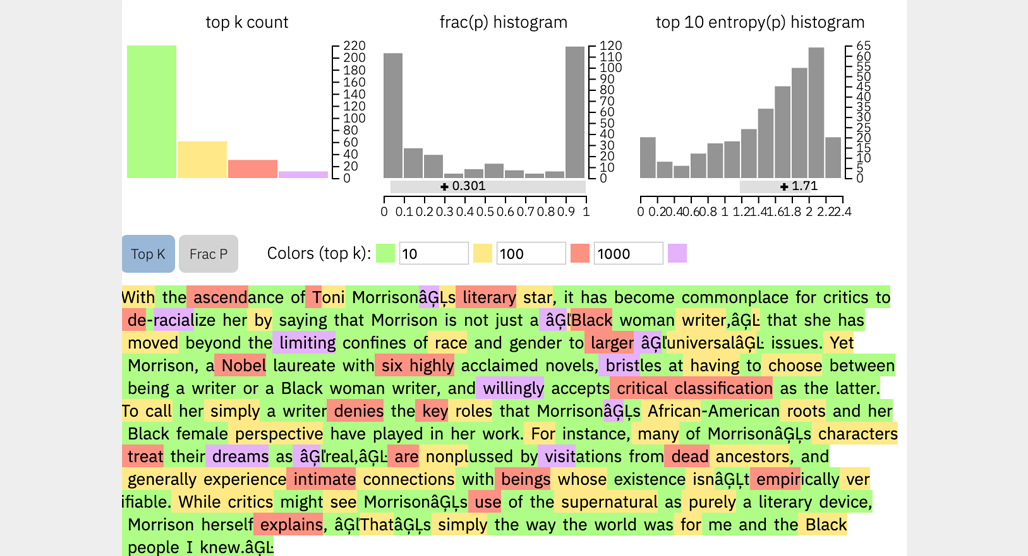

GLTR (“Glitter”) – Short for Giant Language model Test Room. This is one of the first AI content detectors, built by researchers at the MIT-IBM Watson AI Lab and Harvard NLP. Unfortunately, it’s built around GPT-2, which is vastly out of date, so it’s not going to work very well on more recent AIs like Bard or GPT-4.

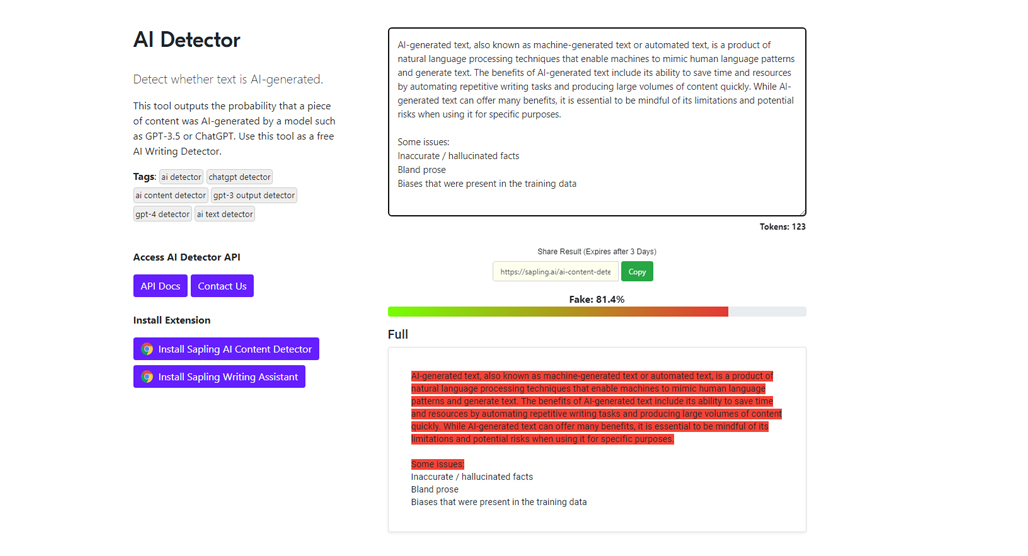

Sapling – This company provides an “AI Copilot” for generating content with AI, and it also offers this detector so people can verify that the content they’re about to publish doesn’t trigger AI flags. Its success rate is slightly lower than tools like Winston, but it’s free, so you can’t really beat that.

There are also a bunch of others out there. Copyleaks, ZeroGPT, and Crossplag are all potential options; OpenAI also released its own classifier, though it withdrew it in July 2023, citing its low rate of accuracy. Realistically, though, you’re going to want to use either Sapling because it’s free, Winston because it’s the most reliable, or just human judgment.

Do AI Detectors Work?

Well, that’s a tricky question, isn’t it? In my opinion, they are very hit or miss, which means they are not reliable tools – yet.

Most of these AI detectors err on the side of caution: they will sometimes tell you something is not AI when it is, because that’s preferable to telling you something is AI when it isn’t. And since there’s no real way to prove it one way or the other, short of the “stupid mistakes” method, it kind of doesn’t matter either.
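The trade-off behind that caution is easiest to see with a score threshold. Detectors typically produce some kind of score, and where the cutoff for “AI” is set decides which mistake they make more often. This Python sketch counts both kinds of error; the scores and labels are made-up toy values, not output from any real detector.

```python
from __future__ import annotations


def error_counts(scores: list[float], is_ai: list[bool], threshold: float) -> tuple[int, int]:
    """Return (false positives, false negatives) when scores >= threshold are flagged as AI.

    A false positive is human text flagged as AI; a false negative is AI text that slips through.
    """
    false_positives = sum(1 for s, ai in zip(scores, is_ai) if s >= threshold and not ai)
    false_negatives = sum(1 for s, ai in zip(scores, is_ai) if s < threshold and ai)
    return false_positives, false_negatives


# Toy data: four pieces of content with hypothetical detector scores.
scores = [0.20, 0.55, 0.65, 0.90]
is_ai = [False, False, True, True]

print(error_counts(scores, is_ai, threshold=0.5))  # (1, 0): one human piece wrongly flagged
print(error_counts(scores, is_ai, threshold=0.7))  # (0, 1): one AI piece slips through
```

A cautious detector is essentially one with a high threshold: it accepts missing some AI content in exchange for rarely accusing a human writer.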

What it all comes back to is the core tenets of SEO:

- Is the content original – that is, not plagiarized – and does it present information in a compelling way?

- Is it factually accurate and as truthful as you can make it?

- Does it meet the goals you need it to meet?

These are all the important questions.

At the end of the day, it’s not terribly important whether or not an AI generates the content, a human does, or the two work in concert – at least, according to Google.

It’s a tool, just like any other. Unless you or your audience have ethical concerns surrounding the AI – and don’t get me wrong, many do – identifying specifically AI content isn’t terribly helpful.

- If you’re a content creator who wants to use AI to help generate content, just don’t use its output as-is.

- You can use the AI to help you brainstorm topic ideas (or, better yet, just use Topicfinder! It’s free to sign up).

- You can use AI to build out an article outline, or provide a meta title and description. (Topicfinder does this, too!)

- You can use it for code problem-solving, and so on.

To avoid having your content flagged as AI-generated, write in a unique style and voice and do the bulk of the actual writing yourself.

Make sure your logic, factual accuracy, consistency, and other elements of the text are on point. Hit all those checks, and you’ll be fine!

How do you feel about these suggestions? Have you come across an AI content detector that you believe is a game-changer? Which one from the list resonates most with you, and how has your experience been with it? Share your thoughts in the comments below. I’m eager to kick off a meaningful conversation on this topic.

